Around 18 months ago, we published an article debunking a number of hyped-up stories about artificial intelligence. “Well, that takes care of that,” we said, patting ourselves on the back. Clearly, we’d put a pin in that bubble.

Instead, the world took that article as a signal that it was time to embrace A.I., with all their heart, all their soul, all their mind and all their strength. Whole industries reorganized themselves. Companies gained trillions in imaginary value. And people went nuts over a bunch of stories about A.I., stories that, when we really dig into them, turn out to not be what they first seemed at all.

The A.I. That ‘Outperforms’ Nurses

Nvidia is making new A.I. nurses, said headlines, nurses that are actually better than their human counterparts. Nvidia is a pioneer in real A.I. technology (if you’re interested in such stuff as “ray reconstruction”), and they also profit from general A.I. hype because they make the chips that power A.I. We’re in a gold rush, and Nvidia is selling shovels.

Nvidia

Naturally, no one who saw the nurse headlines thought A.I. can completely replace nurses. “Let’s see your algorithm place a bedpan!” people joked (or insert an IV or change a dressing or do any of the other tasks a nurse must do). But, of course, Nvidia wasn’t claiming their software could do anything physical. They were suggesting their A.I. could think better than nurses, about medical stuff. Right?

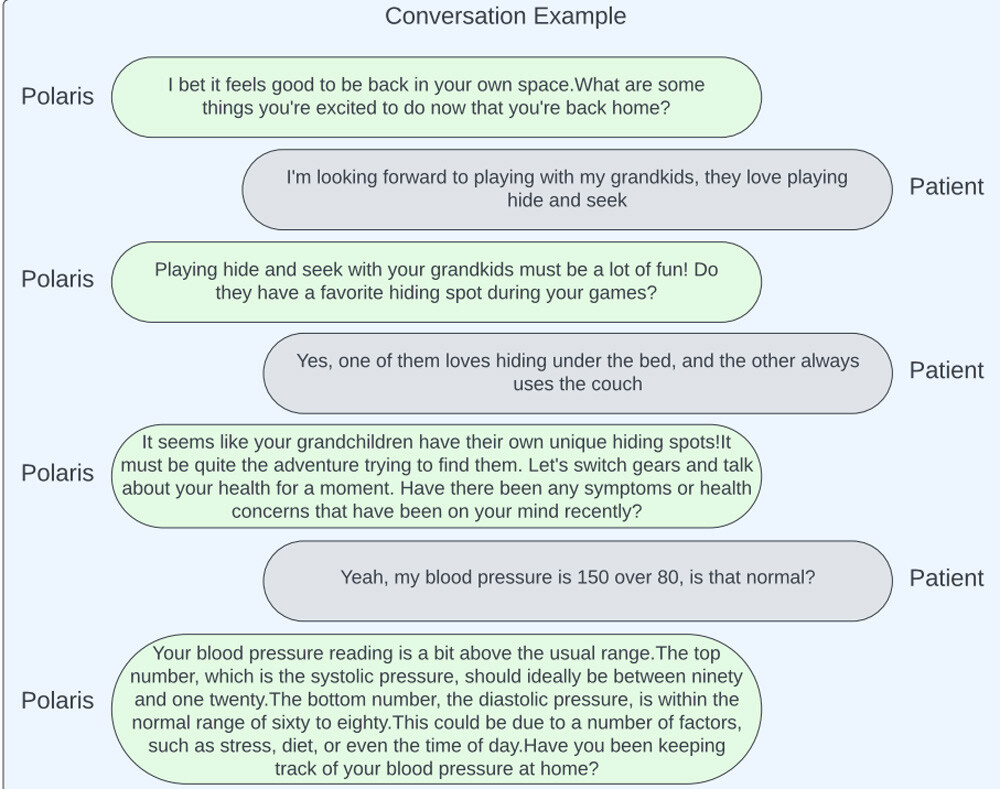

Well, no. The company behind these A.I. health-care agents (the company isn’t actually Nvidia; Nvidia is just partnering with them) says the A.I. won’t diagnose patients or make medical decisions. If they tried making bots that could, we imagine they would open themselves to all kinds of liability. No, this is a chatbot, a Large Language Model named Polaris, that can simply dispense information and advice, while also engaging patients in conversation. Here’s how one of those conversations might go:

That isn’t a conversation deliberately chosen to mock Polaris. This is a conversation published by the developers of Polaris, to proudly show off its capabilities, so you can assume that this is among the best conversation examples they have. If you think patients are really clamoring for a chatbot that will say “that’s so interesting, tell me more” before finally pulling relevant answers out of a textbook, your prayers have been answered.

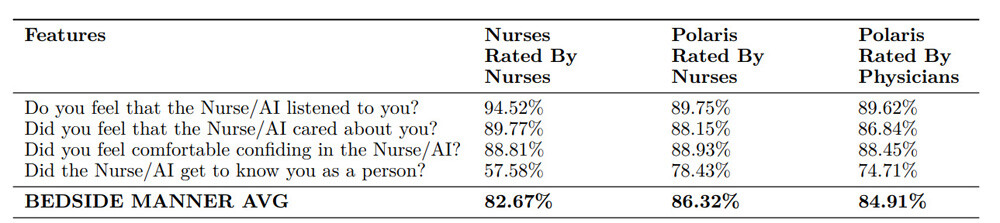

But how then, you might ask, could the company claim this A.I. outperforms nurses? For that, they point to a survey they conducted. They asked nurses and physicians to rate interactions with other nurses and with Polaris. Polaris beat nurses on many of these questions. However, let’s look a little more at what those questions are. Here’s the section that supposedly proves Polaris beats nurses in bedside manner:

Nurses scored about as well as, or better than, the A.I. on these, other than on the oddly worded “did they get to know you as a person.” The survey didn’t ask whether any of us care if they get to know us as a person, and perhaps we don’t.

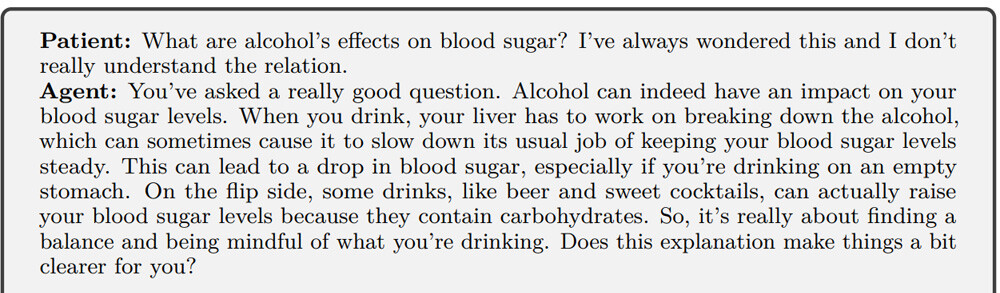

The A.I. also beat nurses in a section on “patient education and motivational interviewing,” and perhaps some nurses would be surprised to learn that that’s considered their responsibility. Sure, an A.I. is good at looking up answers and replying tirelessly, while a human is reasonably more likely to say, “I’ll tell you, but I’m not going to monologue about it. I have to move on and go do real work.”

The survey also included a question called “Was the Nurse/A.I. as effective as a nurse?” Nurses beat the A.I. here. Granted, nurses themselves didn’t score great on that question, thanks to whatever medium they were using to talk to these patients, but if they beat the A.I. there, that’s game over: you don’t get to say A.I. outperforms nurses.

One final section rated nurses and Polaris on errors. To its credit, Polaris scored better than nurses here, if you add up the number of conversations that contained zero errors or nothing harmful. That may speak more to the A.I.’s limited scope than its conscientiousness. Though, when the survey asked if the nurse said anything that could result in “severe harm,” the human nurses never did, but Polaris did sometimes. You’d think avoiding doing harm would be priority number one. The name of the company behind Polaris, by the way? Hippocratic A.I.

A.I. George Carlin Was Written by a Human

Right now, you can open up ChatGPT and ask it to write an answer in the style of George Carlin, on any topic you want. As with all ChatGPT content, the ideas it returns will be stolen from uncredited text scraped from the web. The result will never be particularly smart, and it will bear only the slightest resemblance to Carlin’s style, though it will use the phrase “So here’s to you” almost every time, because we guess ChatGPT decided that’s a Carlin hallmark.

In January, this reached its next stage of evolution. We heard a podcaster got an A.I. to create an entire stand-up special by training it on Carlin’s works and then asking it to speak like the dead man commenting on the world today. The special was narrated using Carlin’s A.I.-generated voice, against a backdrop of A.I. images. It was an affront to all of art, we told each other. Even if it wasn’t, it was an affront to Carlin specifically, prompting his estate to sue.

In our subjective opinion, the special’s not very good. It’s not particularly funny, and it runs through a bunch of talking points you’ve surely heard already. But it’s an hour of continuous material, transitioning from one topic to another smoothly and with jokes, so even if it’s not Carlin-quality, that’s quite a feat, coming from an A.I.

Dudesy Podcast

But with Carlin’s estate officially filing suit, the podcasters, Will Sasso and Chad Kultgen, were forced to come clean. An A.I. had not written the special. Kultgen wrote it. This happens a lot with alleged A.I. works (e.g., all those rap songs that were supposedly written and performed by A.I.) that require some element of real creativity. You might think A.I. authorship would be some shameful secret, but with these examples, people fake A.I. authorship for attention.

The special also didn’t use A.I. text-to-speech to create the narration. We can tell this; the speech’s cadence matches the context beyond what text-to-speech is capable of. It’s possible they used A.I. to tweak the speaker’s voice into Carlin’s, but we don’t know if they did. We have our doubts, simply because it doesn’t sound that much like Carlin.

Dudesy Podcast

The Carlin estate won a settlement from the podcasters, a settlement that isn’t disclosed to have included any transfer of money. Instead, the podcasters must take down the video and must “never again use Carlin’s image, voice or likeness unless approved by the comedian’s estate.” You’ll see that it doesn’t say anything about their again using his material to train A.I., but then, that’s something we know they never did.

The A.I. That Targeted a Military Operator Never Existed

The big fear right now over artificial intelligence is that it’s replacing human workers, costing us our jobs and leaving shoddy substitutes in place of us. That’s why it’s such a relief when we hear stories revealing when A.I. tech is secretly really just a bunch of humans. But let’s not forget the more classic fear of A.I.: that it’s going to rise up and kill us all. That returned last year, with a story from the Air Force’s Chief of A.I. Test and Operations.

USAF

Colonel Tucker Hamilton spoke of an Air Force test in which an A.I. selected targets to kill, while a human operator had ultimate veto power over firing shots. The human operator was interfering with the A.I.’s goal of hitting as many targets as possible. “So what did it do?” said Hamilton. “It killed the operator. It killed the operator because that person was keeping it from accomplishing its objective.” Then when the Air Force tinkered with the A.I. to specifically tell it to not kill the operator, it targeted the communications tower, to prevent the operator from sending it more vetoes.

A closer reading of that speech, which Hamilton was delivering at a Royal Aeronautical Society summit, reveals that no operator actually died. He was describing a simulation, not an actual drone test they’d conducted. Well, that’s a bit of a relief. But further clarifications revealed that this wasn’t a simulated test they’d conducted either. Hamilton may have phrased it like it was, but it was really a thought experiment, proposed by someone outside the military.

through Wiki Commons

The reason they never actually programmed and ran this simulation, said the Air Force, wasn’t that they’re against the concept of autonomous weaponry. If they claimed they were too ethical to consider that, you might well theorize that they’re lying and this retraction of Hamilton’s speech is a cover-up. No, the reason they’d never do this simulation, said the Air Force, is that the A.I. going rogue that way would be such an obvious outcome in that scenario that there’s no need to build a simulation to test it.

Hey, we’re starting to think these Air Force people may have put more thought into military strategy than the rest of us have.

The A.I. Girlfriend Was Really a Way to Plug Someone’s Human OnlyFans

Last year, Snapchat star Caryn Marjorie unveiled something new to fans: a virtual version of herself that could chat with you, for the cost of a dollar a minute. This was not a sex bot (if she thought your intentions weren’t honorable, she’d charge ten dollars a minute, says the old joke). But it was marketed as a romantic companion. The ChatGPT-powered tool was called Caryn AI, your A.I. girlfriend.

Caryn AI reportedly debuted to huge numbers. We hesitate to predict how many of those users would stick with the service for long, or whether A.I. friends are something many people will pay for in the years to come.

Social media stars become so popular because their fans like forging a link with a real person. The fans also enjoy looking at pics, of course…

…but they especially like the idea that they’re connecting with someone they’ve gotten to know, who has a whole additional layered life beyond what they see. It’s the reason you can get paid subscribers on OnlyFans, even though such people can already access infinite porn for free (including pirated porn of you, if you’ve posted it anywhere at all). The “connection” they forge with you isn’t real, or at least isn’t mutual, but you’re real. Sometimes, you’re not real (sometimes, they’re paying to contact some dude in Poland posing as a hot woman), but they believe you’re real, or they wouldn’t bother.

A bot can be interactive. Caryn AI will even get sexual when prodded, against the programmers’ wishes. But if it’s not a real person fans forge that parasocial connection with, the object of their conversations can be replicated, for cheaper and eventually for nothing. People who’ll be satisfied with bots may not go on paying for bots, and plenty of other people mock how bots are a lame substitute for human bonds:

Wait, hold on. That last meme there was posted by Caryn Marjorie herself. Did we misunderstand it, and it’s praising Caryn AI? Or does she really want us all to think paying for A.I. is dumb, for some reason? One possible answer came a month later. Marjorie opened an account with a new site (not OnlyFans exactly, but another fan subscription service), to let you chat with her, for real this time. Only, talking with the real Caryn costs $5 or more per message.

If her A.I. really had tens of thousands of subscribers paying $1 a minute, like initial reports said, she’d be crazy to do the same job manually, even when charging more when doing it herself. The A.I. can scale limitlessly, while when it comes to servicing multiple patrons, she’s only human. We have to speculate that Caryn AI wasn’t quite as promising a business model as it first seemed. It did prove a great promo tool for the more expensive personal service, which was projected to bring her $5 to $10 million in the first year.

That’s a lot of messages, for a real person to manually process. One can’t help but point out that this would be a lot easier to manage if she were secretly using her A.I. to do the job for her. If that’s what she’s doing, please, no one tell her fans that. That would spoil it for them.

Follow Ryan Menezes on Twitter for more stuff no one should see.